Job

The `spark-submit` script launches `org.apache.spark.deploy.SparkSubmit`.

Job Submit

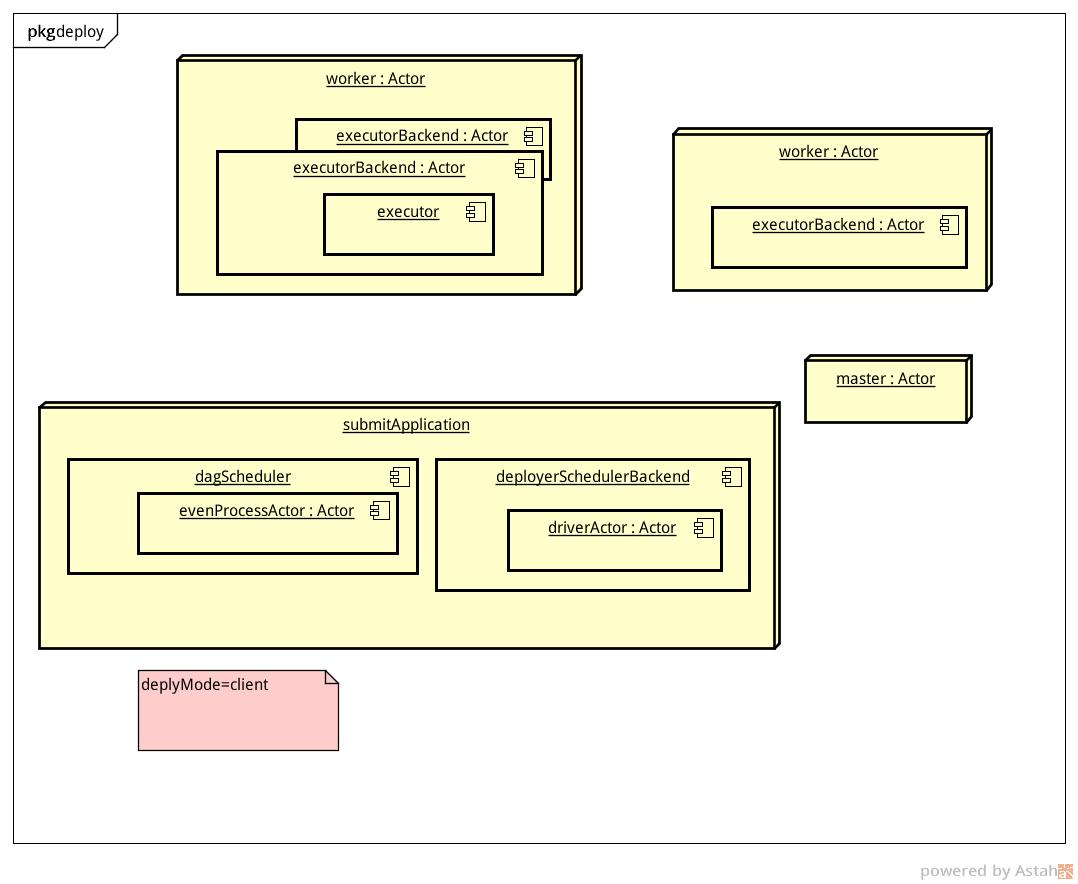

SparkSubmit submits the application to the master. When deploymode=cluster, SparkSubmit wraps the application in org.apache.spark.deploy.Client.

When deploymode=client, SparkSubmit starts the application's main class directly.
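The dispatch described above can be sketched as a small function. This is a simplified illustration, not the actual SparkSubmit code: the object name `DeployModeSketch` and the method `childMainClass` are hypothetical, and only the two deploy modes mentioned in these notes are handled.

```scala
// Simplified sketch of the deploy-mode dispatch; not the real SparkSubmit logic.
object DeployModeSketch {
  // Class name taken from the notes above.
  val ClientClass = "org.apache.spark.deploy.Client"

  /** Returns the class that the submit step would start for the given deploy mode. */
  def childMainClass(deployMode: String, appMainClass: String): String =
    deployMode match {
      case "cluster" => ClientClass  // the application is wrapped in Client
      case "client"  => appMainClass // the application's main class runs directly
      case other     => throw new IllegalArgumentException(s"Unknown deploy mode: $other")
    }
}
```

For example, `DeployModeSketch.childMainClass("client", "com.example.WordCount")` returns the application class unchanged (`com.example.WordCount` is a made-up name), while `"cluster"` returns the Client class.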

Client creates and starts a ClientActor, which wraps the application as a DriverWrapper and then notifies the master.

The driver's mainClass is DriverWrapper.

private class ClientActor(driverArgs: ClientArguments, conf: SparkConf)

extends Actor with ActorLogReceive with Logging {

override def preStart() = {

masterActor = context.actorSelection(Master.toAkkaUrl(driverArgs.master))

driverArgs.cmd match {

case "launch" =>

val mainClass = "org.apache.spark.deploy.worker.DriverWrapper"

masterActor ! RequestSubmitDriver(driverDescription)

When the master receives this message, it adds the driver to waitingDrivers and calls schedule(). schedule() takes each driver from waitingDrivers and picks a Worker on which to launch it.

private[spark] class Master(

host: String,

port: Int,

webUiPort: Int,

val securityMgr: SecurityManager)

extends Actor with ActorLogReceive with Logging {

override def receiveWithLogging = {

case RequestSubmitDriver(description) => {

logInfo("Driver submitted " + description.command.mainClass)

val driver = createDriver(description)

persistenceEngine.addDriver(driver)

waitingDrivers += driver

drivers.add(driver)

schedule()

}

}

def launchDriver(worker: WorkerInfo, driver: DriverInfo) {

logInfo("Launching driver " + driver.id + " on worker " + worker.id)

worker.addDriver(driver)

driver.worker = Some(worker)

worker.actor ! LaunchDriver(driver.id, driver.desc)

driver.state = DriverState.RUNNING

}
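The excerpt above does not show schedule() itself, so the following is only a sketch of the idea stated in the notes: take drivers from the waiting queue and place each one on a worker that has room. `Driver`, `Worker`, and the first-fit rule are hypothetical simplifications; the real Master may choose workers differently.

```scala
import scala.collection.mutable.ArrayBuffer

object DriverScheduleSketch {
  // Hypothetical, simplified stand-ins for DriverInfo and WorkerInfo.
  final case class Driver(id: String, memMb: Int, cores: Int)

  final class Worker(val id: String, var memFreeMb: Int, var coresFree: Int) {
    val drivers = ArrayBuffer.empty[Driver]

    def canRun(d: Driver): Boolean = memFreeMb >= d.memMb && coresFree >= d.cores

    def addDriver(d: Driver): Unit = {
      drivers += d
      memFreeMb -= d.memMb
      coresFree -= d.cores
    }
  }

  /** Assigns each waiting driver to the first worker with enough free resources.
    * Returns (driverId, workerId) pairs; drivers that fit nowhere stay in `waiting`. */
  def schedule(waiting: ArrayBuffer[Driver], workers: Seq[Worker]): Seq[(String, String)] = {
    val launched = ArrayBuffer.empty[(String, String)]
    for (driver <- waiting.toList) { // iterate over a copy so removal is safe
      workers.find(_.canRun(driver)).foreach { worker =>
        worker.addDriver(driver)
        waiting -= driver
        launched += ((driver.id, worker.id))
      }
    }
    launched.toList
  }
}
```

A driver that does not fit on any worker simply remains in the queue until a later call to schedule.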

On the Worker side, the LaunchDriver message is handled as follows (Worker.scala):

case LaunchDriver(driverId, driverDesc) => {

logInfo(s"Asked to launch driver $driverId")

val driver = new DriverRunner(conf, driverId, workDir, sparkHome, driverDesc, self, akkaUrl)

drivers(driverId) = driver

driver.start()

}

DriverRunner starts a thread that launches DriverWrapper. DriverWrapper then starts the application and creates a WorkerWatcher actor.

DriverRunner.scala

private[spark] class DriverRunner(...

def start() = {

new Thread("DriverRunner for " + driverId) {

override def run() {

try {

val builder = CommandUtils.buildProcessBuilder(driverDesc.command, driverDesc.mem,

sparkHome.getAbsolutePath, substituteVariables, Seq(localJarFilename))

launchDriver(builder, driverDir, driverDesc.supervise)

}

catch {

case e: Exception => finalException = Some(e)

}

worker ! DriverStateChanged(driverId, state, finalException)

}

}.start()

}
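The shape of start() above (run the launch on a dedicated thread, capture any exception, then report the final state) can be reproduced without Spark or Akka. In this sketch the `report` callback stands in for `worker ! DriverStateChanged(...)`, and the `State` values are hypothetical; the real code uses DriverState and distinguishes more outcomes.

```scala
object DriverRunnerSketch {
  // Hypothetical state values for illustration only.
  sealed trait State
  case object Finished extends State
  case object Failed extends State

  /** Runs `launch` on its own thread and reports the outcome through `report`,
    * mirroring the try / catch / notify shape of DriverRunner.start(). */
  def start(driverId: String, launch: () => Unit)(
      report: (String, State, Option[Exception]) => Unit): Thread = {
    val thread = new Thread("DriverRunner for " + driverId) {
      override def run(): Unit = {
        var finalException: Option[Exception] = None
        try {
          launch()
        } catch {
          case e: Exception => finalException = Some(e)
        }
        val state = if (finalException.isEmpty) Finished else Failed
        // The report is sent whether or not the launch succeeded.
        report(driverId, state, finalException)
      }
    }
    thread.start()
    thread
  }
}
```

Returning the Thread lets a caller join it, which is convenient in tests; the excerpt above does not return it.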

Job Running

Every RDD action calls SparkContext's runJob method, which returns one result per partition.

/**

* Run a function on a given set of partitions in an RDD and return the results as an array. The

* allowLocal flag specifies whether the scheduler can run the computation on the driver rather

* than shipping it out to the cluster, for short actions like first().

*/

def runJob[T, U: ClassTag](

rdd: RDD[T],

func: (TaskContext, Iterator[T]) => U,

partitions: Seq[Int],

allowLocal: Boolean

): Array[U] = {

val results = new Array[U](partitions.size)

runJob[T, U](rdd, func, partitions, allowLocal, (index, res) => results(index) = res)

results

}

/**

* Run a function on a given set of partitions in an RDD and pass the results to the given

* handler function. This is the main entry point for all actions in Spark. The allowLocal

* flag specifies whether the scheduler can run the computation on the driver rather than

* shipping it out to the cluster, for short actions like first().

*/

def runJob[T, U: ClassTag](

rdd: RDD[T],

func: (TaskContext, Iterator[T]) => U,

partitions: Seq[Int],

allowLocal: Boolean,

resultHandler: (Int, U) => Unit) {

logInfo("Starting job: " + callSite.shortForm)

dagScheduler.runJob(rdd, cleanedFunc, partitions, callSite, allowLocal,

resultHandler, localProperties.get)

}

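(index, res) => results(index) = res is the result handler: it stores the result for output index `index` in results(index).

The caller then blocks in waiter.awaitResult until the job's results are available.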
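To make the role of the handler concrete, here is a minimal sketch that runs everything locally. The partitions are modelled as a plain `Seq[Seq[T]]` instead of an RDD, there is no TaskContext, and the names `runJobWithHandler` and `collectResults` are hypothetical. Note that the handler's index is the position within the requested `partitions`, which is why the result array has `partitions.size` slots.

```scala
import scala.reflect.ClassTag

object RunJobSketch {
  /** Runs `func` on each requested partition and hands (index, result) to the handler. */
  def runJobWithHandler[T, U](
      data: Seq[Seq[T]],
      func: Iterator[T] => U,
      partitions: Seq[Int],
      resultHandler: (Int, U) => Unit): Unit =
    partitions.zipWithIndex.foreach { case (partition, index) =>
      resultHandler(index, func(data(partition).iterator))
    }

  /** Array-returning variant: the handler just stores each result at its index. */
  def collectResults[T, U: ClassTag](
      data: Seq[Seq[T]],
      func: Iterator[T] => U,
      partitions: Seq[Int]): Array[U] = {
    val results = new Array[U](partitions.size)
    runJobWithHandler[T, U](data, func, partitions, (index: Int, res: U) => results(index) = res)
    results
  }
}
```

Calling `RunJobSketch.collectResults[Int, Int](Seq(Seq(1, 2), Seq(3), Seq(4, 5, 6)), _.sum, Seq(0, 2))` sums partitions 0 and 2 and stores the two sums at indices 0 and 1.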

DAGScheduler

def runJob[T, U: ClassTag](

rdd: RDD[T],

func: (TaskContext, Iterator[T]) => U,

partitions: Seq[Int],

callSite: CallSite,

allowLocal: Boolean,

resultHandler: (Int, U) => Unit,

properties: Properties = null)

{

val start = System.nanoTime

val waiter = submitJob(rdd, func, partitions, callSite, allowLocal, resultHandler, properties)

waiter.awaitResult() match {

case JobSucceeded => {

logInfo("Job %d finished: %s, took %f s".format

(waiter.jobId, callSite.shortForm, (System.nanoTime - start) / 1e9))

}

case JobFailed(exception: Exception) =>

logInfo("Job %d failed: %s, took %f s".format

(waiter.jobId, callSite.shortForm, (System.nanoTime - start) / 1e9))

throw exception

}

}

16/02/15 14:28:27 INFO DAGScheduler: Job 0 finished: saveAsTextFile at WordCount.scala:25, took 3.481700 s

Task completion is reported to the job's listener in handleTaskCompletion() (DAGScheduler):

private[scheduler] def handleTaskCompletion(event: CompletionEvent) {

event.reason match {

case Success =>

task match {

case rt: ResultTask[_, _] =>

stage.resultOfJob match {

case Some(job) =>

job.listener.taskSucceeded(rt.outputId, event.result)

private[scheduler] class DAGSchedulerEventProcessActor(dagScheduler: DAGScheduler)

extends Actor with Logging {

case completion @ CompletionEvent(task, reason, _, _, taskInfo, taskMetrics) =>

dagScheduler.handleTaskCompletion(completion)

def taskEnded(

task: Task[_],

reason: TaskEndReason,

result: Any,

accumUpdates: Map[Long, Any],

taskInfo: TaskInfo,

taskMetrics: TaskMetrics) {

eventProcessActor ! CompletionEvent(task, reason, result, accumUpdates, taskInfo, taskMetrics)

}
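The listener that receives taskSucceeded is the waiter returned by submitJob, and awaitResult blocks on it. A minimal sketch of such a waiter, assuming plain JVM monitors and ignoring job failure and cancellation, might look like the following. The class name `Waiter` is hypothetical and this is not the actual JobWaiter source.

```scala
object JobWaiterSketch {
  /** Simplified waiter: forwards each task result to the handler and unblocks
    * awaitResult() once all tasks have reported. Failure handling is omitted. */
  final class Waiter[U](totalTasks: Int, resultHandler: (Int, U) => Unit) {
    private var finishedTasks = 0

    /** Called from the scheduler side once per successful task. */
    def taskSucceeded(index: Int, result: U): Unit = synchronized {
      resultHandler(index, result)
      finishedTasks += 1
      if (finishedTasks == totalTasks) notifyAll()
    }

    /** Called from the submitting thread; returns at once if there are no tasks. */
    def awaitResult(): Unit = synchronized {
      while (finishedTasks < totalTasks) wait()
    }
  }
}
```

With `totalTasks` equal to 0 the waiter never blocks, which corresponds to the early return for an empty partition list in submitJob.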

/**

* Submit a job to the job scheduler and get a JobWaiter object back. The JobWaiter object

* can be used to block until the job finishes executing or can be used to cancel the job.

*/

def submitJob[T, U](

rdd: RDD[T],

func: (TaskContext, Iterator[T]) => U,

partitions: Seq[Int],

callSite: CallSite,

allowLocal: Boolean,

resultHandler: (Int, U) => Unit,

properties: Properties = null): JobWaiter[U] =

{

// Check to make sure we are not launching a task on a partition that does not exist.

val maxPartitions = rdd.partitions.length

partitions.find(p => p >= maxPartitions || p < 0).foreach { p =>

throw new IllegalArgumentException(

"Attempting to access a non-existent partition: " + p + ". " +

"Total number of partitions: " + maxPartitions)

}

val jobId = nextJobId.getAndIncrement()

if (partitions.size == 0) {

return new JobWaiter[U](this, jobId, 0, resultHandler)

}

assert(partitions.size > 0)

val func2 = func.asInstanceOf[(TaskContext, Iterator[_]) => _]

val waiter = new JobWaiter(this, jobId, partitions.size, resultHandler)

eventProcessActor ! JobSubmitted(

jobId, rdd, func2, partitions.toArray, allowLocal, callSite, waiter, properties)

waiter

}

private[scheduler] class DAGSchedulerEventProcessActor(dagScheduler: DAGScheduler)

extends Actor with Logging {

def receive = {

case JobSubmitted(jobId, rdd, func, partitions, allowLocal, callSite, listener, properties) =>

dagScheduler.handleJobSubmitted(jobId, rdd, func, partitions, allowLocal, callSite,

listener, properties)
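Both submitJob and taskEnded only post a message; the actor's receive method then picks the handler by event type. The same pattern can be sketched with an in-memory queue in place of Akka. The event classes here are trimmed-down, hypothetical versions of JobSubmitted and CompletionEvent, and the processing is synchronous for simplicity, whereas the real actor handles messages asynchronously.

```scala
import scala.collection.mutable

object EventLoopSketch {
  // Hypothetical, trimmed-down event types.
  sealed trait Event
  final case class JobSubmitted(jobId: Int, partitions: Seq[Int]) extends Event
  final case class CompletionEvent(jobId: Int, partition: Int) extends Event

  final class Scheduler {
    private val queue = mutable.Queue.empty[Event]
    val log = mutable.ArrayBuffer.empty[String]

    /** Plays the role of `eventProcessActor ! event`: enqueue and return. */
    def post(event: Event): Unit = {
      queue.enqueue(event)
    }

    /** Plays the role of the actor's receive: one handler per event type. */
    def processAll(): Unit = {
      while (queue.nonEmpty) {
        queue.dequeue() match {
          case JobSubmitted(jobId, partitions) =>
            log += s"job $jobId submitted with ${partitions.size} partitions"
          case CompletionEvent(jobId, partition) =>
            log += s"job $jobId finished partition $partition"
        }
      }
    }
  }
}
```

Because the event trait is sealed, the compiler can warn when a new event type is added without a matching case.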